Cleora part 1: Graph Embeddings

A Guide to Cleora: Efficient Embedding of Heterogeneous Data

Understanding relationships among entities is central to data science. Cleora addresses this need by efficiently modeling relationships in heterogeneous relational data. To see how Cleora operates, it helps to first understand the concepts of graphs and hypergraphs.

1. Graphs vs Hypergraphs

Graphs consist of vertices (or nodes) interconnected by edges. In a relational table context, the vertices may represent unique entities, like users or products, while the edges represent their relationships. For example:

- Entities: John, Mary, Xavier, A, B, C

- Relationships: (John, A), (Mary, C)

Hypergraphs extend this concept: a single hyperedge can connect any number of vertices, not just two. For example, treating customers as hyperedges and products as vertices groups the products by the customers who bought them. A customer who bought three products then forms a hyperedge linking three vertices at once, something a plain edge cannot express.
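To make the distinction concrete, here is a minimal sketch of both structures in plain Python. The edge list reuses the relationships from the example above; the purchases in `hyperedges` and the helper `hyperedges_containing` are made up for illustration.

```python
from collections import defaultdict

# Plain graph: every edge joins exactly two vertices.
edges = [("John", "A"), ("Mary", "C")]

adjacency = defaultdict(set)
for u, v in edges:
    adjacency[u].add(v)
    adjacency[v].add(u)  # undirected: store both directions

# Hypergraph: each hyperedge (a customer) groups any number of
# vertices (products). These purchases are hypothetical.
hyperedges = {
    "John": {"A", "B", "C"},
    "Mary": {"C"},
    "Xavier": {"A", "B"},
}

def hyperedges_containing(vertex, hyperedges):
    """Return the names of all hyperedges that include the given vertex."""
    return sorted(name for name, members in hyperedges.items() if vertex in members)
```

Calling `hyperedges_containing("A", hyperedges)` lists every customer whose hyperedge includes product A.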

Hypergraph Expansion

For Cleora to operate, it must convert these hyperedges into standard edges, a process called hypergraph expansion. Two strategies are often used:

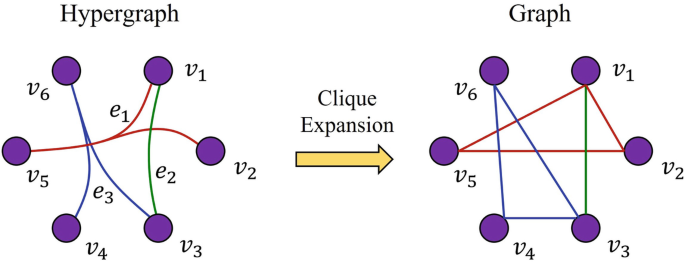

- Clique Expansion: Each hyperedge is transformed into a clique, meaning every pair of nodes in the hyperedge is directly connected. A hyperedge with k nodes produces k(k-1)/2 edges, so the edge count grows quickly, but every pairwise relationship is represented directly.

Source: Springer

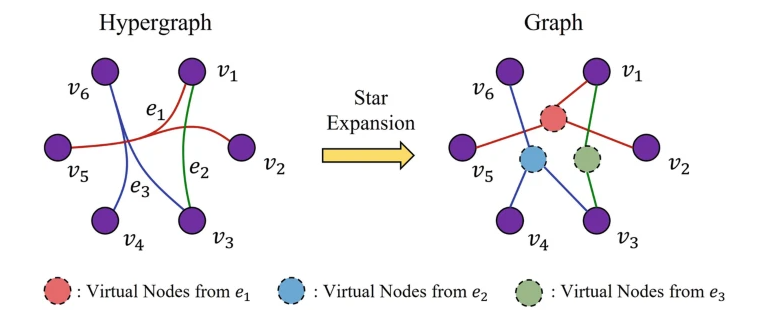

- Star Expansion: A simpler strategy where one new node is added per hyperedge and connected to all original nodes in that hyperedge. A hyperedge with k nodes produces only k edges, and its members stay linked through the new node.

Source: Springer
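Both expansion strategies can be sketched in a few lines. The function names and the sample hyperedges below are illustrative assumptions, not part of Cleora's API.

```python
from itertools import combinations

def clique_expansion(hyperedges):
    """Connect every pair of vertices that share a hyperedge."""
    edges = set()
    for members in hyperedges.values():
        for u, v in combinations(sorted(members), 2):
            edges.add((u, v))
    return edges

def star_expansion(hyperedges):
    """Add one new node per hyperedge and link it to each member."""
    edges = set()
    for name, members in hyperedges.items():
        for v in members:
            edges.add((name, v))
    return edges

# Hypothetical data: customers are hyperedges, products are vertices.
hyperedges = {"John": {"A", "B", "C"}, "Xavier": {"A", "B"}}
clique = clique_expansion(hyperedges)
star = star_expansion(hyperedges)
```

In this sketch the pair (A, B) appears in both hyperedges but is stored once because `edges` is a set; a weighted variant would count repeated pairs instead of collapsing them.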

2. Cleora Embeddings

Cleora’s embeddings are designed to be fast and efficient, which sets them apart from embeddings generated by popular systems like Node2vec or DeepWalk. Here are some notable features:

Key Technical Features

- Efficiency: Cleora is reported to run roughly two orders of magnitude faster than traditional approaches such as Node2vec or DeepWalk.

- Inductivity: Embeddings are generated on-the-fly based on interactions, so new entities can be added seamlessly.

- Stability: Deterministic starting vectors mean that embeddings remain consistent even across different runs, which is a challenge with other algorithms.

- Cross-dataset Compositionality: Cleora allows for embeddings of the same entity across datasets to be combined effectively through averaging.

- Dim-wise Independence: Each dimension in an embedding is independent, which facilitates efficient combination with multi-view embeddings, especially in conjunction with Conv1D layers.

- Extreme Parallelism: Built in Rust, Cleora leverages thread-level parallelism for most computational tasks, enhancing speed.
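Two of these properties, stability and cross-dataset compositionality, are easy to illustrate. The sketch below derives a starting vector from a hash of the entity name, so the same name always yields the same vector, and combines vectors by averaging. SHA-256, the `seed` parameter, and both function names are assumptions made for this example; the text above does not specify which hash Cleora uses.

```python
import hashlib

def initial_vector(entity, dim, seed=0):
    """Map an entity name to a fixed vector with components in [-1, 1]."""
    vector = []
    for i in range(dim):
        # Each dimension is derived independently from (seed, index, name).
        digest = hashlib.sha256(f"{seed}:{i}:{entity}".encode("utf-8")).digest()
        value = int.from_bytes(digest[:8], "big") / 2**64  # scale to [0, 1]
        vector.append(2.0 * value - 1.0)
    return vector

def average(vectors):
    """Combine vectors of the same entity (e.g. from two datasets) component-wise."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]
```

Because no random number generator is involved, two runs on different machines start from identical vectors, which is what makes the resulting embeddings comparable across runs.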

Key Usability Features

From a user perspective, Cleora provides:

- Direct embedding of heterogeneous relational tables without pre-processing.

- Ease in embedding mixed datasets involving both interactions and text.

- No cold start problems for new entities.

- Real-time updates on embeddings without additional infrastructure.

- Immediate support for multi-view embeddings and stability across temporal, incremental embeddings.

3. The Algorithm

Cleora operates as a robust multi-purpose model, designed to efficiently learn stable and inductive entity embeddings from a diverse array of data structures, including:

- Heterogeneous undirected graphs

- Heterogeneous undirected hypergraphs

- Text and categorical data

Cleora’s Input

Cleora functions optimally with relational tables containing varied entity types. For instance, a table may consist of user IDs and URLs visited, and can include categorical columns with either single or multiple values. The core data structure underlying Cleora is a weighted undirected heterogeneous hypergraph, enabling complex relationships between nodes without directional constraints.
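As a rough illustration of how a table row can become a hyperedge, the sketch below turns each row into a set of typed node identifiers. The column names, the space-separated multi-value cell, and the `column__value` naming are assumptions for this example and do not describe Cleora's actual input format.

```python
# Hypothetical table: one row per user, with a multi-valued "urls" column.
rows = [
    {"user": "u1", "urls": "a.com b.com", "country": "PL"},
    {"user": "u2", "urls": "b.com", "country": "DE"},
]

def row_to_hyperedge(row, multi_valued=("urls",)):
    """Turn one table row into a set of typed node ids (one hyperedge)."""
    nodes = set()
    for column, cell in row.items():
        # Multi-valued cells are split on whitespace; others hold one value.
        values = cell.split() if column in multi_valued else [cell]
        for value in values:
            # Prefix with the column name so entity types stay distinct.
            nodes.add(f"{column}__{value}")
    return nodes

hyperedges = [row_to_hyperedge(row) for row in rows]
```

Prefixing each value with its column keeps, say, a user ID and a URL apart even if their raw strings happen to match.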

Graph Construction

The graph construction begins with the creation of a helper matrix and proceeds through several steps:

- Creation of a sparse matrix to represent different relationships among nodes.

- Parallel processing to optimize the creation and updating of these matrices.

- Using hashing techniques for efficient storage of identifiers.
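The steps above can be sketched as follows, with a dictionary of dictionaries standing in for the sparse matrix. Clique expansion of each hyperedge, row normalization of the weights, and CRC32 as the identifier hash are all assumptions chosen for brevity, and the sketch runs sequentially rather than in parallel.

```python
from collections import defaultdict
from itertools import combinations
import zlib

def node_id(name):
    """Hash a string identifier to a compact integer (collisions are possible)."""
    return zlib.crc32(name.encode("utf-8"))

def build_transition_matrix(hyperedges):
    """Build a row-normalized sparse matrix {row: {col: weight}}."""
    counts = defaultdict(lambda: defaultdict(float))
    for members in hyperedges:
        # Clique expansion: every co-occurring pair adds weight in both directions.
        for u, v in combinations(sorted(members), 2):
            a, b = node_id(u), node_id(v)
            counts[a][b] += 1.0
            counts[b][a] += 1.0
    matrix = {}
    for row, cols in counts.items():
        total = sum(cols.values())
        matrix[row] = {col: weight / total for col, weight in cols.items()}
    return matrix

# Hypothetical hyperedges: each set holds the entities of one table row.
matrix = build_transition_matrix([{"u1", "a", "b"}, {"u2", "b"}])
```

Only pairs that actually co-occur get an entry, so the structure grows with the number of edges rather than with the square of the number of nodes.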

Training Embeddings

The trained embeddings represent entities within the graph. The process includes:

- Initialization of an embedding matrix.

- Iterative multiplication involving the sparse matrix.

- L2-normalization to maintain consistency.

- Parallel execution to accelerate the training process.

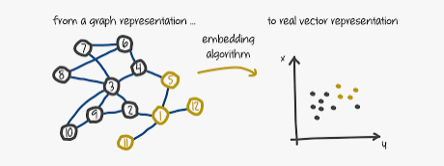

Source: nebula-graph
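The training loop listed above can be sketched in pure Python. This is a simplified, sequential illustration: the function names, the dictionary-based matrix format, and the default of three iterations are assumptions, and the sketch expects every column key of the matrix to also appear as a row key.

```python
import math

def l2_normalize(vector):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)

def train(matrix, initial, iterations=3):
    """matrix: {row: {col: weight}}, initial: {node: starting vector}."""
    embeddings = {node: list(vec) for node, vec in initial.items()}
    dim = len(next(iter(embeddings.values())))
    for _ in range(iterations):
        updated = {}
        for row, cols in matrix.items():
            # Sparse matrix-vector product: weighted sum of neighbor vectors.
            acc = [0.0] * dim
            for col, weight in cols.items():
                for i, x in enumerate(embeddings[col]):
                    acc[i] += weight * x
            updated[row] = l2_normalize(acc)
        embeddings = updated
    return embeddings

# Tiny path graph a - b - c with row-normalized weights (hypothetical data).
matrix = {"a": {"b": 1.0}, "b": {"a": 0.5, "c": 0.5}, "c": {"b": 1.0}}
initial = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
result = train(matrix, initial)
```

Each row of an iteration depends only on the previous iteration's embeddings, and each dimension is updated independently, which is why this kind of loop lends itself to parallel execution.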

Memory Considerations

Efficient memory usage is critical. Cleora relies on custom sparse matrix structures that save space by storing only nonzero entries.
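To show what storing only nonzero entries means in practice, here is a sketch of the standard compressed sparse row (CSR) layout. CSR is used purely as a familiar illustration; the text does not say which layout Cleora's custom structures follow, and the function names are hypothetical.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) into CSR arrays."""
    values, col_indices, row_offsets = [], [], [0]
    for row in dense:
        for col, x in enumerate(row):
            if x != 0:
                values.append(x)
                col_indices.append(col)
        # Offset where the next row starts inside `values`.
        row_offsets.append(len(values))
    return values, col_indices, row_offsets

def csr_row(values, col_indices, row_offsets, row):
    """Return the (column, value) pairs stored for one row."""
    start, end = row_offsets[row], row_offsets[row + 1]
    return list(zip(col_indices[start:end], values[start:end]))

dense = [
    [0.0, 1.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
values, col_indices, row_offsets = to_csr(dense)
```

The 4x4 matrix has 16 cells, but only its 4 nonzero values are kept, together with one column index per value and one offset per row boundary.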

4. Use Cases of Cleora Embeddings

Cleora’s efficient approach to embedding heterogeneous data opens up a plethora of use cases across various domains. Below, we explore some common applications where Cleora embeddings can bring significant value:

Recommender Systems

- Collaborative Filtering: Cleora’s embeddings can enhance the performance of recommender systems by efficiently capturing the interactions between users and items, even when dealing with sparse or cold-start scenarios. Its ability to inductively add new users or items allows for dynamic updates and tailored recommendations without retraining the entire model.

- Content-Based Recommendations: By utilizing multi-view embeddings, Cleora can incorporate text and user interaction data to generate highly personalized recommendations.
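Once user and item embeddings exist, a simple recommender can rank items by cosine similarity to a user vector. The vectors and the `recommend` helper below are hypothetical and only illustrate the ranking step, not how Cleora produces the embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def recommend(user_vector, item_vectors, k=2):
    """Return the k item names most similar to the user vector."""
    ranked = sorted(
        item_vectors,
        key=lambda item: cosine(user_vector, item_vectors[item]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical 2-dimensional embeddings.
item_vectors = {"A": [1.0, 0.0], "B": [0.8, 0.6], "C": [0.0, 1.0]}
user_vector = [0.9, 0.1]
top = recommend(user_vector, item_vectors)
```

For a large catalog, the exhaustive sort would typically be replaced by an approximate nearest-neighbor index.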

Fraud Detection

- Anomaly Detection: In scenarios such as credit card fraud detection, Cleora embeddings can identify anomalous patterns in user behavior by efficiently modeling the relationships between users and transactions, highlighting deviations from typical user profiles.

- Transaction Networks: Cleora is well-suited for embedding transaction networks where entities are connected through complex multi-faceted relationships, helping detect suspicious patterns indicative of fraudulent activities.

Social Network Analysis

- Community Detection: By converting social networks into graph or hypergraph structures, Cleora can identify social circles or communities within a vast network, aiding in understanding group dynamics and key influences.

- Influence Propagation: The stability and consistency of Cleora embeddings can support influence estimation in social networks, allowing for precise modeling of information spread among users.

Biological Network Analysis

- Protein-Protein Interactions: In bioinformatics, Cleora can model complex interaction networks of proteins, helping to predict novel interactions and understand mechanisms of diseases.

- Genetic Networks: Cleora’s ability to handle hypergraphs allows for the exploration of interactions among genes, environmental factors, and diseases, advancing research in personalized medicine.

In summary, Cleora embeddings offer a versatile and scalable solution for modeling and analyzing complex relationships in diverse datasets. Its speed, stability, and compatibility across different data types make Cleora a valuable tool for tackling real-world challenges in numerous data-driven applications. In the next article we will look at Pycleora, the Python package for using Cleora. We will focus on the recommender-system use case and see how to create user embeddings.